State Space Analysis from the Player Perspective: Complexity and Exploration

Series: State Space Analysis

Note: This is the first in a four-part series that builds on a previous piece I wrote about state space representations of video games. I recommend reading that piece first.

In my previous piece, I laid out a framework for modeling games as “state spaces,” or minimal representations of the game’s variables at a particular time during play. In that piece, I focused on developing a state space for any given game in the abstract, effectively creating a perfect representation of the game’s mechanical interactions. However, doing this in practice is effectively impossible; even highly truncated state spaces for a given game are vast and difficult to document accurately. This follow-up series will discuss how players perceive the state space of a given game and how that influences the way we characterize said games.

A Review of Key Terms

But first, let’s go over the key takeaways from the previous article.

The compositional ontology.

Firstly, I have a compositional ontology that I tend to call back to in order to organize what exactly in the game we’re talking about at any given time. The three main elements of every game are as follows:

- System: A game’s system consists of all of the entities in the game and all of its rules that manage interactions between entities in the game.

- Scenario: A game’s scenarios create contexts for the system’s entities to reside in. Each one may implement different sets of entities in differing quantities.

- Structure: A game’s structure sequences scenarios in the order for the player to interact with them in. This can not only include basic sequences but also advanced ones such as randomly generated scenarios, random sequences, or scenario repetition.

Next, there are key elements of the state space analysis that we need to understand in order to continue with this particular article:

- State: A state is a collection of variables that make up a representation of the game as a whole at a particular moment in time. In our analysis, we’ve condensed and altered the set of state variables down to only what is essential to understanding what is happening in the game mechanically at any given time.

- Action: An action is a way that the player can influence the game based on the mechanics provided within the aforementioned system. In a practical context, this is probably a particular input you make on the controller.

- Transition: A transition between two states occurs when an action is performed in a certain state. The rules of the system will ensure that the state variables update in such a way that the game transitions from the initial state to an outcome state.
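To make these three terms concrete, here is a minimal sketch of how a state, an action, and a transition might be written down. Everything in it is an assumption for illustration: the corridor game, the `make_state` and `step` helpers, and the single variable `x` are hypothetical and do not come from any particular game.

```python
from typing import Hashable, Tuple

# A state is an immutable snapshot of the essential game variables.
State = Tuple[Tuple[str, Hashable], ...]
Action = str


def make_state(**variables: Hashable) -> State:
    """Build a hashable state; sorting by name keeps equal states equal."""
    return tuple(sorted(variables.items()))


def step(state: State, action: Action) -> State:
    """Hypothetical system rule: the player walks along a corridor of 3 tiles."""
    variables = dict(state)
    if action == "right":
        variables["x"] = min(variables["x"] + 1, 2)
    elif action == "left":
        variables["x"] = max(variables["x"] - 1, 0)
    return make_state(**variables)


# A transition is an action contextualized by its initial and outcome states.
start = make_state(x=0)
outcome = step(start, "right")
transition = (start, "right", outcome)
```

Note that the same action yields a different transition in a different state: walking right from the last tile leaves the player where they are.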

Complexity and Player Exploration

Our initial concept of the state space is its ideal, purest form: a perfect state space containing every feasible state across the entire game and all of the requisite transition paths between them. For some simple games you may be able to comprehend the entire state space, and with extremely intimate knowledge of a particular game you may be able to sketch out something close to the ideal in your head. However, the complexity of many games (even simple ones) and the amount of time it would take to search through the state space limit how much of the state space any one player can understand. Thus, we perceive games through a subset of their state space.

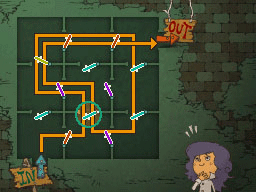

In this puzzle from Professor Layton and the Diabolical Box, we can distinctly see all of the different states and transitions between them at a glance; this is not the norm for many other games we play. [src]

Recall our breakdown of complexity from the previous article. We determined that the cardinality (or total count) of the total set of states denoted standard complexity and that the summed degree across the total set of states (or the total count of transitions) denoted interaction complexity. If we consider what these terms mean in practice, complexity will give insight into the range of each of the states and what states for each variable are valid at any given step in time, and interaction complexity will give insight into the ways states can flow between each other, what actions are viable at any given time, and how situations may evolve if left untouched. Therefore, we should construct two subsets of the overall state space for each individual player: a player’s perceived state set and a player’s perceived transition set. These cover their understanding of the complexity and interaction complexity of the game, respectively.
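As a rough sketch of these definitions, the two complexity measures and the two perceived sets can be computed on a toy state space. The three-state space, the function names, and the coverage ratios are hypothetical. I also assume that each transition is counted once, from its initial state, when summing degree.

```python
# Hypothetical ideal state space: state -> {action: outcome state}.
full_space = {
    "A": {"left": "A", "right": "B"},
    "B": {"left": "A", "right": "C"},
    "C": {"left": "B", "right": "C"},
}


def all_transitions(space):
    """Every (initial state, action, outcome state) triple in the space."""
    return {(s, a, t) for s, actions in space.items() for a, t in actions.items()}


def complexity(space):
    """Cardinality of the total set of states."""
    return len(space)


def interaction_complexity(space):
    """Total count of transitions (summed out-degree across all states)."""
    return len(all_transitions(space))


# What one player has perceived so far: subsets of the ideal sets.
perceived_states = {"A", "B"}
perceived_transitions = {("A", "right", "B"), ("B", "left", "A")}

state_coverage = len(perceived_states) / complexity(full_space)
transition_coverage = len(perceived_transitions) / interaction_complexity(full_space)
```

The coverage ratios are only a convenience for this sketch; for a real game the denominators are exactly the quantities that are impractical to compute.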

When a player begins a game for the first time, they are likely unfamiliar with the game’s scenarios; they may be familiar with the broad strokes of the system from previous iterations of the game, but let’s assume that each game has different scenarios. As the player plays more and more, they will invariably encounter new scenarios that introduce fresh states, thus increasing the size of both their perceived state set and perceived transition set. Both of these should continually trend upwards over productive play. There is a chance that the player may perceive a state or transition that does not actually exist and later rectify their understanding, decreasing one or both sets. For now, we’ll assume that the player perceives the game “rationally,” in the sense that they see the game’s states and transitions for what they are and not for how they are perceived on an individual level; an uncomfortable contradiction for now, but one we will return to eventually. What we seek at the moment is how one increases their respective perceived state and transition sets.

The two perceived sets, states and transitions, each contain separate elements that interact with each other. States only take meaning when they are put in context as distinct from other states, and thus they need transitions in order to provide connective pathways between each. Transitions only take meaning when they beget consequences, and thus they need both initial and outcome states in order to provide context for the action (or inaction) that they represent. The existence of both is intertwined, and players will undoubtedly discover elements of each set simultaneously, especially in moments of floundering or button mashing. However, the strict methods of exploration for each set are different due to the distinction in the two elements and what they represent.

State Exploration

A simple example of state exploration.

When considering exploration of a particular one of these elements, let’s assume that the player is approaching exploration in a structured way. For a player to experimentally explore the total set of states and expand their perceived state set, they would need to hold all other elements as constant; in this case, the transitions. Therefore, we should consider state exploration to be when a player uses a known transition (or subset of actions) in order to discover new states; this is a classic independent variable and dependent variable construction.

An intuitive example of this would be walking around an open area within a game. Let’s say this is some open-world game, with some sort of fundamental movement action (such as walking) and objects to encounter separated by some distance. To explore the space, one would repetitively execute the walking action (or more likely, many different walking actions in different directions) and enter new positional states. A slightly more abstract example would be repeatedly trying a certain attack on an enemy to see changes in hit reactions. In both of these cases, the action is already known and is applied in order to detect new states. Note that the action does not represent the transition one to one: the transition is representative of the action’s use within a particular context. This relation mirrors that of the system and the scenario, where the system represents steadfast rules that the game abides by (including the player’s action set in the abstract), and the scenario provides contexts for those rules to be applied in. States and transitions are the crystallization of those ideas into the world of our state space analysis.
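The walking example can be sketched as a small loop: a known action is applied over and over, and each outcome is recorded as a newly perceived state. The 4x4 grid, the clamping walls, and the names `step` and `explore_states` are assumptions made for this sketch, not features of any specific open-world game.

```python
def step(position, action):
    """Hypothetical movement rule on a 4x4 grid; walls clamp the position."""
    moves = {"north": (0, 1), "south": (0, -1), "east": (1, 0), "west": (-1, 0)}
    dx, dy = moves[action]
    x, y = position
    return (min(max(x + dx, 0), 3), min(max(y + dy, 0), 3))


def explore_states(start, known_actions, max_steps):
    """Cycle through known actions, recording every state and transition met."""
    perceived_states = {start}
    perceived_transitions = set()
    state = start
    for i in range(max_steps):
        action = known_actions[i % len(known_actions)]
        outcome = step(state, action)
        # The action was known in advance; the outcome state is what we learn.
        perceived_transitions.add((state, action, outcome))
        perceived_states.add(outcome)
        state = outcome
    return perceived_states, perceived_transitions


states, transitions = explore_states((0, 0), ["east"], max_steps=5)
```

In this run, walking east five times reveals four positions. The last two steps hit the wall and repeat a transition that has already been perceived, so they add nothing new.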

In this relation a subtle contradiction resides: the transition is known to the player through their knowledge of the action, but its precise consequences (or outcome state) will not be known. A full understanding of the transition cannot be grasped in this instance, despite the fact that the player is attempting to hold it as known and constant in the course of their state exploration. The consequences may be guessed at through the player’s application of the same action through previous, similar states, or rather, their previous state exploration, but as the transition is defined and derives meaning from its consequence, it will remain translucent to the player; the outcome dimly visible but not clear beyond the shadow of a doubt. This also ignores the player’s knowledge of the initial state. At a particular moment in time when one executes a known action in order to explore a new state, it is absolute that they must know the initial state, as they currently exist in it. However, on a longer scale of time, the player may plan to continue repeating that action with elusive and estimated consequences in the future, having no knowledge of the initial state or outcome state of any of their actions. Therefore, with only a knowledge of the system and the scenarios they’ve seen before, the player pushes on to the unknown wilderness of scenarios to come, ensconcing their actions as particular transitions along the way.

Once a state has been perceived by the player, it will remain in the player’s perceived state set from there on out. The state itself has no motion or evolution without transitions to provide them, and thus there is nothing new to explore from the perspective of a stationary state once it has been explored previously. Therefore, for a particular transition that has been taken by the player and contextualized by its initial and outcome states, there is nothing new to discover. The process of state exploration sets its transitions in stone, barring them from ever being freshly perceived again. This is because transitions have no real state of their own, and thus depend solely on the stationary bookends of the states they connect to be provided with character.[1] For one to continue exploring, they cannot revisit the same transitions they have already tried. The game’s scenarios must perpetually provide new states for the player to experience in order to keep the wheel of state exploration turning.

An example of a game with a structure that uses templated scenarios interwoven into a larger world scenario is Far Cry 3. [src]

From the perspective of the structure, creating significant state exploration will likely involve creating as many scenarios as possible. Theoretically one could craft these by hand, but more practically one may create scenario templates to alter and repeat. As an example, in an open-world game many different scenarios sit in fixed and contiguous positions, with each scenario drawing from a template with its own parameters, such as outposts dotting the environment in a modern Far Cry game. Alternatively, the scenarios may be randomly generated at runtime from templates, such as in a roguelike game. Here the states are not persistent, putting the player in a position such that they may not have the opportunity to do anything but perform state exploration. Conversely, if the player gets to the point that they have states in their perceived state set replicated by the random scenario generation, they may not have the opportunity to perform state exploration if they so desire.

From the perspective of the system, a system that differentiates between granular states will result in the ability to perform state exploration even with a constrained scenario set due to nuance of the game’s various state variables. In a highly dynamic system, actions driven by the system (random or otherwise) may cause the state to evolve over time without action from the player, which will result in state exploration but without the player’s guiding hand. This kind of dynamism can most often be seen in games with AI opponents, especially when there are multiple AI opponents at once and their decision-making capabilities are independent from one another. For the player to influence the state exploration process in a system with granular states and constrained scenarios, they will likely need a wider action set to work with. The easiest way to do this is with “analog” controls that distinguish between different similar actions based on the intensity of the input. The perfect fusion of a system that creates many unique states and also has a large action set arises from games with meaningful physics systems, such as racing games with analog throttle and brakes, or rolling marble games with sensitive input for moving the player entity or tilting the playfield.

Both of the above perspectives result in games with high complexity according to the definitions of our state space model. However, the outcomes for the games they define are radically different. When working from opposite sides of our compositional ontology and implicitly decreasing the effect of the other side, our games approach complexity in remarkably different ways that reflect the attributes of their dominant layer; structure for the first perspective and system for the second. When structures get more complex, they add content through their quantity of scenarios. When systems get more complex, they add nuance when applied within any scenario.

Transition Exploration

A simple example of transition exploration.

In state exploration, we held transitions as constant in order to find new states; with transition exploration, we will do the opposite. The exploration of transitions requires holding the states as fixed, and as such we assume they are already known to the player and contained within their perceived state set. If a transition is an action contextualized by its initial and outcome states, then the player conducting transition exploration knows of these two states already and seeks a new way to transition between them.

Here the subtle contradiction discussed regarding transitions and their distinction from actions resolves: the player may know their action set as in state exploration, but they do not know the consequences of particular actions within a particular context. Therefore, with a desired consequence in mind, they can set out to test each element of their action set within a starting state with the goal of achieving a particular end state. An example of this would be testing hit reactions on an enemy in an action game. If the enemy is in a neutral state, and the player wishes to move them into some sort of hitstun or stagger state that exists within the system of the game, they can try various actions from their overall action set in order to achieve that transition. This is effectively the inverse of state exploration, not only in the elements that are being held as fixed, but also in terms of the parameters of the transition that are unknown at the outset. In state exploration, we knew of the action we wanted to take but not the explicit consequence (and thus outcome state) of said action, only an assumed consequence. Here we have an exact consequence that we would like to make true but do not know the action that is required to make the transition work. Of course, the player may assume what action will work to achieve the particular consequence that they desire, but this bleeds back into the concept of state exploration, which for the moment we would like to hold as a separate mode of play. We will come back to their unitary form shortly.
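The hit reaction example can be sketched as the inverse loop: the initial and desired outcome states are fixed, and every action in the action set is tried from the initial state. The rule table, the action names, and the function `explore_transitions` are hypothetical and are not taken from any real game.

```python
def hit_reaction(enemy_state, action):
    """Hypothetical system rule for one enemy; unlisted pairs change nothing."""
    rules = {
        ("neutral", "shoot_head"): "stagger",
        ("neutral", "shoot_leg"): "stagger",
        ("neutral", "shoot_torso"): "flinch",
        ("stagger", "kick"): "knockdown",
    }
    return rules.get((enemy_state, action), enemy_state)


def explore_transitions(initial, desired, action_set, step):
    """Try each action from a fixed initial state, looking for a desired outcome."""
    found = []        # actions that produce the desired transition
    byproducts = {}   # actions that led somewhere else, and where they led
    for action in action_set:
        outcome = step(initial, action)
        if outcome == desired:
            found.append(action)
        else:
            byproducts[action] = outcome
    return found, byproducts


actions = ["shoot_head", "shoot_leg", "shoot_torso", "kick"]
found, byproducts = explore_transitions("neutral", "stagger", actions, hit_reaction)
```

The sketch quietly assumes the player can get back to the neutral state before each attempt, which is a real cost in play rather than a free reset.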

Resident Evil 4 is a game with a variety of different hit reactions. One could sit in a state and test different actions (shooting at different angles) in order to see which actions will transition into a particular hit reaction state. [src]

The key difference here compared to state exploration is that the states, the static elements of our game associated with moments of being, are fixed and known, which, based on our assumptions on how the player plays and how they explore, indicates that they have been explored before through the process of state exploration. This means that they have already been visited, and thus that transition exploration is a process of revisiting states in order to recontextualize them. In the previous section I noted that states cannot be freshly perceived again once they have been explored. This is correct from the isolated view of the state on its own, either viewed as a singular moment or one of many moments chained together by a rote action. What transition exploration does is provide a new angle with which to understand how the state relates to other states. Much like how a transition is contextualized by its initial and outcome states, providing moments between which the transition provides a flow, a state is contextualized by the ways in which it can flow to other states and other states can flow into it, each one solidified as a transition within our model.

However, note the use of plurals in that description: the number of transitions in and out of a state is not fixed, whereas a transition has two fixed states it moves between. The issue of how a state cannot be freshly perceived repeatedly in the process of state exploration has less to do with the characteristics of the state itself and rather the way that freezing the types of action used in exploring states limits the ways in which they can be recontextualized, as the transitions that arise from the use of those actions will remain locked between the states they flow between.[2] A state can be only perceived once on its own through the process of state exploration, but through the process of transition exploration we can repeatedly recontextualize the state through the multitude of transitions where it serves as the initiation. If we set both types of exploration together in a causal chain, we can glimpse how the process of overall exploration works: the initial discovery of a state takes place through the process of state exploration, and once it is included in the perceived state set, we can repeatedly revisit the state in order to discover new transitions and add them to our perceived transition set. One can only explore a state once, but one can use that state to explore transitions many more times than that.

We can consider this as a form of replayability when put in opposition to state exploration, seeing as one will repeatedly revisit the state throughout the process of conducting transition exploration. Where state exploration is concerned with constantly finding new moments in the game to experience, transition exploration is concerned with reconfiguring moments to have different outcomes. Creating significant transition exploration then requires many transitions coming in and out of each state, or as described in our lexicon, high flexibility (the average number of transitions coming out of each state). The most straightforward path to doing this is to create a highly sophisticated system that allows for the abundance of different rules and actions that will form the nucleus of each transition; with this foundation, all of the scenarios that implement a given system will derive large numbers of transitions from the system.
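Flexibility as defined here is simple to compute once a state space is written down. The sketch below uses a hypothetical three-state combat space and invented function names. It also reports the per-state counts, since an average can hide large differences between states.

```python
# Hypothetical combat state space: enemy state -> {player action: outcome state}.
space = {
    "neutral": {"shoot_head": "stagger", "shoot_leg": "stagger", "shoot_torso": "flinch"},
    "flinch": {"wait": "neutral", "shoot_head": "stagger"},
    "stagger": {"wait": "neutral"},
}


def out_degrees(space):
    """Number of transitions leaving each state."""
    return {state: len(actions) for state, actions in space.items()}


def flexibility(space):
    """Average number of transitions coming out of each state."""
    degrees = out_degrees(space)
    return sum(degrees.values()) / len(degrees)


degrees = out_degrees(space)
richest_state = max(degrees, key=degrees.get)
average = flexibility(space)
```

Here the neutral state offers three transitions while the stagger state offers one, so the neutral state is where most transition exploration would happen.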

In state exploration, the primary focus was on states as implemented by the scenarios, and thus we could look at how the exploration was altered from the perspective of both the system and the structure, as both layers of the ontology interact with the scenario layer. However, when discussing transitions and the system they are derived from, we cannot look at how the structure influences transition exploration, as the structure does not influence the system in any way at all. From a developmental perspective, there may be influences between the two; if the structure of a game is to grind quests (scenarios), a material subsystem may be implemented in order to facilitate that. However, with the elements we define as part of this model and observe after the design process has been finished, the structure cannot influence the system. The structure purely sequences and provides scenarios to the player, which are fundamentally collections of states. However, the structure does not control how the states transition to one another; if it switches the underlying system,[3] then it does so by providing a new scenario overlaid on top of that system, not by providing the system to the player with no states to exist in. Thus, while state exploration can be provided through alteration of any of the three layers, transition exploration can only come out of alteration of the system and the scenarios.

The thermanator sections in Ratchet & Clank: Going Commando severely limit the amount of flexibility by featuring few other entities on screen and restricting the player to a weapon with very little in the way of ammo or aiming considerations. [src]

From the perspective of the scenario, states need to be provided that actually leverage each of the existing rules and actions of the system. If one were to make a complex combat system but then provide scenarios using enemies that don’t respond to many of the actions of the player, the interaction complexity and thus the ability for transition exploration will be degraded compared to scenarios using more responsive enemies. This is to say that we may assume that the game’s flexibility is not indicative of all states and that some states will have more transitions coming in and out of them than others. Thus, a scenario aiming for significant transition exploration should focus on states that also feature a large number of transitions. This might also indicate that the system itself needs to be pruned of entities and rules that decrease flexibility. Of course, this may not always be desirable, as it may increase the efficiency of certain strategies or create mass strategy viability in a way that contradicts the game’s primary design goals. Puzzle-like games with a focus on limited viable paths to the win state may be less interested in flexibility (and thus transition exploration) than dynamic games.

It is also worth noting that transition exploration requires the player to actually be able to revisit the state. Combat scenarios make for a strong way to think about transition exploration because they frequently involve returning to some neutral state. For something like the racing game example given as part of the state exploration section, returning to a previous state may be only possible under a lap model, rendering it effectively impossible in something that uses a rally rule set. Still, the frequency with which one could return to a particular state may be on the order of a few seconds in a combat scenario, compared to a lap repeating within a few dozen seconds or even a couple minutes in a racing game. Theoretically this is an issue the structure can actually influence by providing replays of given scenarios; however, all of these issues could be solved at the system level (e.g. providing a restart action and rule sets based around multiple laps) and at the scenario level (e.g. providing a race with multiple laps). At a root level virtually every game could be replayed from start to finish even if it had a linear and non-repeatable structure during a singular playthrough, so in practice the point has to do more with how frequently one can return to a state rather than if one can repeat a state in the first place.

Unlike in the state exploration example, I see no particular duality in how one approaches increasing interaction complexity and allowing transition exploration. Herein lies another key difference between states and transitions: states can arise from developments at any layer of the compositional ontology, as they reside in the scenario, but transitions can arise only from the interaction of the system and the scenarios. The structure operates on states in bulk, shifting them around and replicating them to match the needs of the developer. At a coarse level it may affect the transitions between different scenarios, but it cannot control the individual states in those scenarios that serve as the outcome of a transition from a different scenario, nor does it control how those transitions were defined. Transition exploration will always be system-driven and to some extent scenario-driven, and the two act in harmony. The same increase in system nuance that increases complexity will also increase interaction complexity, and scenarios must be designed to center states that feature a high number of transitions.

A Unitary Exploration

Let’s say we are partaking in transition exploration. There’s particular initial and outcome states we want, and we have a selection of actions to use in order to find transitions between the two. We choose one of the actions and execute it, but it takes us to a new, previously unperceived state. In the original moment, we were conducting transition exploration, but look at our current game elements: we’ve chosen an action without understanding the consequences and discovered a new state in the process. This is effectively state exploration! In the process of trying to conduct one kind of exploration, we’ve morphed into the other kind seamlessly.

A more complex example, now with a set of states that are already in the perceived set (including the initial and outcome states) and a set of states that haven’t been perceived yet. You could also judge for yourself what transitions have been perceived and which haven’t; for this hypothetical I didn’t bother labeling them.

We can now consider the process of exploration as a unitary process that ebbs and flows between state exploration and transition exploration. If we were to flip the above case, we might be conducting state exploration before finding ourselves in a particular state that we think might lead to another state we’ve already been to. At this point, we can then conduct transition exploration to try to get to that latter, pre-visited state. Picking a transition that takes one to a different, previously unperceived outcome state would then result in state exploration, and so on and so forth. The two processes are intrinsically linked.

An important distinction between the two here is that state exploration can become the accidental outcome of intended transition exploration, but purposeful state exploration can never transform into transition exploration without explicit intent from the player. This is because state exploration occurs when the outcome state is not truly known, while with transition exploration the player must explicitly mark a desired outcome. This gives us one way to distinguish between the two modes now that it’s clear that they’re constantly in flux with one another: examine an action based on whether the player intended a particular outcome or not.

If we then discard our assumption of the player rationally and perfectly engaging in the exploration process, we can then see other potential ways that an exploration situation can unfold:

- The player could try a new action in transition exploration and end up in an unintended state that had already been perceived, including a return to the initial state.

- The player could use an action in a newly perceived state in state exploration and end up in a state that had previously been perceived.

- The player could use a previously perceived transition to get from one state to another that both reside in the perceived state set. We can refer to this as “backtracking.”

We can see that these top two themselves are similar to transition exploration in that they discover new transitions between states in the perceived state set, but they differ from our previous understanding because they are not conducted in an experimental fashion. Even in the first situation, while transition exploration is being performed, the new transition is an accidental byproduct; perhaps a useful one, but not necessarily one that solves the problem that was being tackled. This idea of accidental versus purposeful transition exploration is put into direct contrast against state exploration, which has no such distinction. As the outcome is never concretely assumed, state exploration is identical whether done as the accidental result of another conscious process or as an intentional process on its own. This fleshes out our previous idea of the two being distinguished by their intentionality; not only can they be characterized by whether the player intended a particular outcome state, but this characterization gives added nuance to how transition exploration is determined, while state exploration maintains its character no matter the circumstances.
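The cases above can be condensed into a single classification of one step of play, based on what the player had already perceived and whether they intended a particular outcome. This is only my reading of the cases expressed as code; the function name, the labels, and the example sets are hypothetical.

```python
def classify_step(initial, action, outcome,
                  perceived_states, perceived_transitions, intended=None):
    """Label one step of play using the player's perceived sets and intent."""
    if (initial, action, outcome) in perceived_transitions:
        # A known transition between two known states.
        return "backtracking"
    if outcome not in perceived_states:
        # A new state is state exploration whether or not it was intended.
        return "state exploration"
    if intended is not None and outcome == intended:
        # A new transition that reaches the outcome the player was aiming for.
        return "purposeful transition exploration"
    # A new transition between known states that was not the goal.
    return "accidental transition exploration"


perceived_states = {"A", "B", "C"}
perceived_transitions = {("A", "x", "B")}

labels = [
    classify_step("A", "x", "B", perceived_states, perceived_transitions),
    classify_step("A", "y", "D", perceived_states, perceived_transitions, intended="C"),
    classify_step("A", "y", "C", perceived_states, perceived_transitions, intended="C"),
    classify_step("A", "z", "B", perceived_states, perceived_transitions, intended="C"),
]
```

Intent only matters in the last two branches, which mirrors the point that state exploration keeps its character no matter the circumstances.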

Additionally, so long as the player conducts transition exploration, they must continue to return to the initial state in their attempts to find transitions to the desired outcome state. This indicates that for transition exploration to recur, if the action taken results in a transition and said transition does not loop back into the initial state, the player must engage in these other activities to get back to the initial state. The process of transition exploration over a period of time will inevitably force the player to conduct state exploration, backtracking, and potentially unrelated transition exploration in order to repeatedly return to the initial state. Again, this is another indication of the intentionality required to undertake the pure transition exploration process; it requires dedication to the objective. Conversely, state exploration can easily repeat itself thanks to the states themselves being transitory as part of the process; one can wander idly and still find new states with no particular objective in mind. With the unitary view of exploration, we can now not only see how the two styles that we delineated between earlier work hand in hand with each other, but we can also see more clearly the distinctions in how they operate and how the player must conduct them.

Analysis

This dialectical unspooling of the concept of exploration has been abstract due to the way it builds out of the rudimentary fabric of our state space analysis; this was my explicit intent, as I wanted to create the underpinnings for these ideas in a bottom-up way using fundamental building blocks rather than approach it top-down with high-level, empirical evidence (although of course this approach has its place). If you’re reading this, you’re likely part of a group that’s sought to understand complexity in games, and while analyzing it in the abstract has its merits, engaging with a game is an evolving process. Our state space analysis provides the elements we need for describing a game, and this exploration analysis now adds a new dimension of progressive growth from the player’s perspective. We do not and cannot perceive games in the former analytical manner; we learn, we grow, we make connections, and we build our understanding of a game from the elements we perceive of it, and thus this analysis has provided an extrapolation of the rigid, ideal elements into an environment where not everything is known and the game’s inner workings are still blurry to the player. With this in mind, let me provide some ways to apply this analysis and mode of thinking to the way one approaches play and critique regarding game complexity:

- When playing a game, how often do you feel as if you are engaging in exploration of new places and things you haven’t seen before (state exploration) versus how often you reengage with things you’ve previously experienced to learn new ways to navigate them (transition exploration)? What would you eyeball the ratio as? If the game has multiple systems of gameplay, how does each one differ in this comparison? Do certain scenarios throughout the game favor one over the other?

- When engaged in state exploration, how granular are the states? Are the states provided to you through an abundance of scenarios, potentially driven by structural elements, or are they driven by the gameplay system? When playing a game with state bulk instead of state granularity, how are the scenarios delivered to the player? Are they provided in such a structure that scenarios repeat often? Are the scenarios procedurally generated? Do the scenarios feel as if they provide new experiences for you, or are you exploring the new states purely because there are new states to explore? If the states feel granular and nuanced, what variables are providing that granularity? What rules and elements of the gameplay system provide the ability to move between many different states? Does the sheer number of states create many viable paths to reach a win state, or are many of the states outside of the realm of viability?[4]

For Sega Rally Championship, I would argue the nuance in the handling, vehicle dynamics, terrain conditions, and elevation combine to create a lot of states from a system perspective, even with its limited arcade structure. [src]

- When engaged in transition exploration, how often do you find many ways to transition between two separate states (flexibility)? What elements of the system provide you that flexibility? When you move between scenarios, which give you the ability to flex your knowledge of the many transitions possible between states, and which are more rigid? How does the game enable long-term transition exploration through its replayability mechanics? Is it difficult to recreate a previously held state, or is it easy? If you were to fail in this process and end up in an unintended state, does the game hold you accountable for your decisions and force you to continue, or do you have ease of access to return to the first state? What is the timescale that transition exploration exists on for you?

- What is your process for switching between the multiple expressions of exploration? Do you find yourself to be a player that “goes with the flow” (state exploration), or when you lock into a certain concept do you set objectives for yourself and try to fulfill them (transition exploration)? Do you make assumptions on the outcomes of your actions, and if so, how often are they correct?[5] Do you find that the game encourages a particular style of play? Do you find that different genres change the way you play or your mental process surrounding it?

These are not exhaustive; I would hope that your particular read on this article provides you with your own new avenues for examining the games you play and how you go about playing them. These are particular questions that come to my mind when I play games, and I’ve drawn them from specific ideas I’ve laid out in the primary parts of this article.
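If you would rather estimate the state-versus-transition ratio from the first question than eyeball it, one rough way is to keep a log of play and tally it. The Python sketch below rests on assumptions of my own: the log format, the `classify_log` helper, and the three-way classification are hypothetical. A step that reaches a never-before-seen state counts as state exploration (even though it also reveals a new transition), a step that uses a new transition between already-seen states counts as transition exploration, and anything else counts as repetition.

```python
def classify_log(log):
    """Tally steps of a play log given as (state, action, next_state) tuples."""
    # The starting state is treated as already perceived.
    seen_states = {log[0][0]} if log else set()
    seen_transitions = set()
    counts = {"state": 0, "transition": 0, "repeat": 0}
    for state, action, nxt in log:
        step = (state, action, nxt)
        if nxt not in seen_states:
            counts["state"] += 1       # arrived somewhere new
        elif step not in seen_transitions:
            counts["transition"] += 1  # new route between known states
        else:
            counts["repeat"] += 1      # nothing new perceived
        seen_states.add(nxt)
        seen_transitions.add(step)
    return counts

# Hypothetical session: two states, three distinct transitions.
session = [
    ("A", "jump", "B"),
    ("B", "drop", "A"),
    ("A", "dash", "B"),
    ("B", "drop", "A"),
    ("A", "jump", "B"),
]
counts = classify_log(session)
print(counts)
```

The classification is deliberately crude, and it still assumes the player can read states and transitions directly off the game, but it gives the ratio a concrete shape to argue about.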

Conclusion

We now have a framework for understanding the process of a player’s evolution with a game, which complements our state space analysis of the ideal of how a game should work. We’ve distinguished two concepts of how one explores within a game, and we’ve united them into a singular idea of exploration while also detailing what makes the different modes distinct. We’ve also concocted a list of questions and considerations for applying this both when we’re understanding how we play and when we’re practicing critique.

Now we’re ready to shed our next assumption, which is that the player can actually perceive these states and transitions directly. While in some cases a game might directly reveal quite a significant portion of its state, in many cases a game obfuscates its state from the player. Instead, representations of the state are modeled after real or aesthetically legible constructs so as to provide an abstraction of state variables for the player. My next article will cover this phenomenon (hopefully in a more empirical fashion; my apologies to those who dislike the philosophical bent of this particular piece).

1. Sicart provides for us a definition of mechanics adjacent to ours of actions within the system in “Defining Game Mechanics”: “[G]ame mechanics are methods invoked by agents, designed for interaction with the game state.” The view I submit above is more abstract, without definite agents, but generally we agree that actions beget new changes in the state, here listed as mechanics, and in my work ensconced within the transition. Further below he builds out this definition by discussing contextuality within an ontological distinction between “mechanics” and “rules” and uses the following example of Shadow of the Colossus’s climb mechanic and stamina rule as an illustration: “A property/rule states that if stamina is below a certain threshold, climbing is not possible anymore. The game loop checks the game state; if the player invokes the climb mechanic, those functions that determine the consequences and boundaries of the players’ interaction are called, and the resulting changes in the game state are evaluated against the rules of the game.” We must be precise here: while an action in our understanding will have an effect on the literal state of the game as a program (perhaps flipping a bit or propagating a communication packet), the abstract game state will not update until this game loop has finished processing. A failed climb with no stamina will change the processor’s state as it churns through the instructions necessary to handle the execution of the rule, but it may not actually affect the abstract state if the rule does not cause the relevant game variables to change. This is to say that at a further level of granularity transitions do represent changes in state, but in our process of abstraction we’ve removed our ability to characterize them that way.

2. As a note: transitions could theoretically lead to multiple outcome states if there are random elements involved. Björk and Holopainen digest this issue in “Describing Games: An Interaction-Centric Structural Framework” by adding an additional processing step: actions in their framework trigger “events,” and events with complex processing are handled by “evaluation functions” that update the state. The depth of the entity that an evaluation function contains is a bit too rich for my liking, and I’d rather keep our simple graph foundation. Instead, I would define separate transitions out of a single action and initial state that are randomly determined, where there is some probability that one transition happens based on the input parameters versus the other(s). This is similar to a Markov Decision Process without the reward capacity, as we aren’t particularly concerned with using machine learning to “solve” our model, partially because the topic has already been investigated. In fact, if you were to scroll through the other articles published in IEEE Transactions on Games, you’d find very little that doesn’t involve reinforcement learning topics (except for, of course, procedural generation topics).

3. I think it’s worth positing now that a game could theoretically implement multiple disjoint systems; in fact, I would say this is the heart of many minigame-driven franchises such as the Like a Dragon series. This is the reason for the precision of the language a bit before this, where I wrote “scenarios that implement a certain system”, rather than just blindly saying scenarios draw from the system. I build off this assumption here. If I am playing Like a Dragon, the game is structured in such a way that when I begin the game, I enter into an exploration scenario. The exploration scenarios can feed into several other different kinds of scenarios, such as gambling, bowling, combat, etc. Kamurocho here would be a scenario, structurally determined to have outlets for the aforementioned minigames, with the exact position of transition into those minigame scenarios determined by the design of Kamurocho. The structure can’t say that a particular system is put in one of these outlets, because systems are immaterial without being expressed through a scenario. As mentioned before, this is a mirror of the immateriality of transitions versus the materiality of states. Likewise, the system has no view into what the structure does because it has no concern whatsoever for defining states, which are the sole things that the structure can manipulate.

4. Building upon the basic structure of prime paths from our previous article, which are states and transitions connected together in a sequence without loops (usually to an end state or “win” state), we can extrapolate a “perceived path set,” which creates as many prime paths as possible from the elements within our perceived state and transition sets. This allows us to also assess perceived viability, efficiency, complexity, etc. from the ideal versions of those metrics we discussed in the previous article. When your friend says some move is useless and nonviable in a particular game and you correct him with an explanation of why it is viable, your two separate sets of perceived game elements are colliding. You are discussing the game as an ideal entity between the two of you, but the segments of the game that you have actually perceived are likely to be different.

5. I’ve handwaved the idea of assumptions about yet-to-be-perceived states and transitions a fair bit in this article. The primary reason for this is that we’re still locked into this abstraction where we assume the player actually can directly process the states and transitions that are taking place; this is obviously obfuscated from the player in most cases. This will be dealt with in future articles in this series.
